Hi Daria

Thanks for your fast response.

Yes, I agree that is the most likely cause. I have set up the Chariot MQTT client, and it parses the same MQTT messages (received from HiveMQ) as Sparkplug B.

I also tried without using aliases.

The version is Sparkplug B 3.0.0.

Here is the class used for serialization:

```csharp
// <auto-generated>
// This file was generated by a tool; you should avoid making direct changes.
// Consider using 'partial classes' to extend these types
// Input: my.proto
// </auto-generated>

#region Designer generated code
#pragma warning disable CS0612, CS0618, CS1591, CS3021, IDE0079, IDE1006, RCS1036, RCS1057, RCS1085, RCS1192
#nullable enable
namespace SparkplugNet.VersionB.ProtoBuf
{
    [global::ProtoBuf.ProtoContract()]
    internal partial class ProtoBufPayload : global::ProtoBuf.IExtensible
    {
        private global::ProtoBuf.IExtension? __pbn__extensionData;
        global::ProtoBuf.IExtension global::ProtoBuf.IExtensible.GetExtensionObject(bool createIfMissing)
            => global::ProtoBuf.Extensible.GetExtensionObject(ref __pbn__extensionData, createIfMissing);

        [global::ProtoBuf.ProtoMember(1, Name = @"timestamp")]
        public ulong Timestamp { get; set; }

        [global::ProtoBuf.ProtoMember(2, Name = @"metrics")]
        public global::System.Collections.Generic.List<Metric> Metrics { get; set; } = new global::System.Collections.Generic.List<Metric>();

        // Begin FLS: 14072023/1. IsRequired = true added for compliance with Ignition
        [global::ProtoBuf.ProtoMember(3, Name = @"seq", IsRequired = true)]
        // End FLS: 14072023/1.
        public ulong Seq { get; set; }

        [global::ProtoBuf.ProtoMember(4, Name = @"uuid")]
        [global::System.ComponentModel.DefaultValue("")]
        public string Uuid { get; set; } = string.Empty;

        [global::ProtoBuf.ProtoMember(5, Name = @"body")]
        public byte[]? Body { get; set; } = Array.Empty<byte>();

        [global::ProtoBuf.ProtoMember(6, Name = @"details")]
        public global::System.Collections.Generic.List<byte> Details { get; set; } = new global::System.Collections.Generic.List<byte>();

        [global::ProtoBuf.ProtoContract()]
        internal partial class Template : global::ProtoBuf.IExtensible
        {
            private global::ProtoBuf.IExtension? __pbn__extensionData;
            global::ProtoBuf.IExtension global::ProtoBuf.IExtensible.GetExtensionObject(bool createIfMissing)
                => global::ProtoBuf.Extensible.GetExtensionObject(ref __pbn__extensionData, createIfMissing);

            [global::ProtoBuf.ProtoMember(1, Name = @"version")]
            [global::System.ComponentModel.DefaultValue("")]
            public string Version { get; set; } = string.Empty;

            [global::ProtoBuf.ProtoMember(2, Name = @"metrics")]
            public global::System.Collections.Generic.List<Metric> Metrics { get; set; } = new global::System.Collections.Generic.List<Metric>();

            [global::ProtoBuf.ProtoMember(3, Name = @"parameters")]
            public global::System.Collections.Generic.List<Parameter> Parameters { get; set; } = new global::System.Collections.Generic.List<Parameter>();

            [global::ProtoBuf.ProtoMember(4, Name = @"template_ref")]
            [global::System.ComponentModel.DefaultValue("")]
            public string TemplateRef { get; set; } = string.Empty;

            [global::ProtoBuf.ProtoMember(5, Name = @"is_definition")]
            public bool IsDefinition { get; set; }

            [global::ProtoBuf.ProtoMember(6, Name = @"details")]
            public global::System.Collections.Generic.List<byte> Details { get; set; } = new global::System.Collections.Generic.List<byte>();

            [global::ProtoBuf.ProtoContract()]
            internal partial class Parameter : global::ProtoBuf.IExtensible
            {
                private global::ProtoBuf.IExtension? __pbn__extensionData;
                global::ProtoBuf.IExtension global::ProtoBuf.IExtensible.GetExtensionObject(bool createIfMissing)
                    => global::ProtoBuf.Extensible.GetExtensionObject(ref __pbn__extensionData, createIfMissing);

                [global::ProtoBuf.ProtoMember(1, Name = @"name")]
                [global::System.ComponentModel.DefaultValue("")]
                public string Name { get; set; } = string.Empty;

                [global::ProtoBuf.ProtoMember(2, Name = @"type")]
                public uint Type { get; set; }

                [global::ProtoBuf.ProtoMember(3, Name = @"int_value")]
                public uint IntValue
                {
                    get => __pbn__value.Is(3) ? __pbn__value.UInt32 : default;
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(3, value);
                }
                public bool ShouldSerializeIntValue() => __pbn__value.Is(3);
                public void ResetIntValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 3);

                private global::ProtoBuf.DiscriminatedUnion64Object __pbn__value;

                [global::ProtoBuf.ProtoMember(4, Name = @"long_value")]
                public ulong LongValue
                {
                    get => __pbn__value.Is(4) ? __pbn__value.UInt64 : default;
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(4, value);
                }
                public bool ShouldSerializeLongValue() => __pbn__value.Is(4);
                public void ResetLongValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 4);

                [global::ProtoBuf.ProtoMember(5, Name = @"float_value")]
                public float FloatValue
                {
                    get => __pbn__value.Is(5) ? __pbn__value.Single : default;
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(5, value);
                }
                public bool ShouldSerializeFloatValue() => __pbn__value.Is(5);
                public void ResetFloatValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 5);

                [global::ProtoBuf.ProtoMember(6, Name = @"double_value")]
                public double DoubleValue
                {
                    get => __pbn__value.Is(6) ? __pbn__value.Double : default;
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(6, value);
                }
                public bool ShouldSerializeDoubleValue() => __pbn__value.Is(6);
                public void ResetDoubleValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 6);

                [global::ProtoBuf.ProtoMember(7, Name = @"boolean_value")]
                public bool BooleanValue
                {
                    get => __pbn__value.Is(7) ? __pbn__value.Boolean : default;
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(7, value);
                }
                public bool ShouldSerializeBooleanValue() => __pbn__value.Is(7);
                public void ResetBooleanValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 7);

                [global::ProtoBuf.ProtoMember(8, Name = @"string_value")]
                [global::System.ComponentModel.DefaultValue("")]
                public string StringValue
                {
                    get => __pbn__value.Is(8) ? ((string)__pbn__value.Object) : "";
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(8, value);
                }
                public bool ShouldSerializeStringValue() => __pbn__value.Is(8);
                public void ResetStringValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 8);

                [global::ProtoBuf.ProtoMember(9, Name = @"extension_value")]
                public ParameterValueExtension ExtensionValue
                {
                    get => __pbn__value.Is(9) ? ((ParameterValueExtension)__pbn__value.Object) : new();
                    set => __pbn__value = new global::ProtoBuf.DiscriminatedUnion64Object(9, value);
                }
                public bool ShouldSerializeExtensionValue() => __pbn__value.Is(9);
                public void ResetExtensionValue() => global::ProtoBuf.DiscriminatedUnion64Object.Reset(ref __pbn__value, 9);

                public ValueOneofCase ValueCase => (ValueOneofCase)__pbn__value.Discriminator;

                public enum ValueOneofCase
                {
                    None = 0,
                    IntValue = 3,
                    LongValue = 4,
                    FloatValue = 5,
                    DoubleValue = 6,
                    BooleanValue = 7,
                    StringValue = 8,
                    ExtensionValue = 9,
                }

                [global::ProtoBuf.ProtoContract()]
                internal partial class ParameterValueExtension : global::ProtoBuf.IExtensible
                {
                    private global::ProtoBuf.IExtension? __pbn__extensionData;
                    global::ProtoBuf.IExtension global::ProtoBuf.IExtensible.GetExtensionObject(bool createIfMissing)
                        => global::ProtoBuf.Extensible.GetExtensionObject(ref __pbn__extensionData, createIfMissing);

                    [global::ProtoBuf.ProtoMember(1, Name = @"extensions")]
                    public global::System.Collections.Generic.List<byte> Extensions { get; set; } = new global::System.Collections.Generic.List<byte>();
                }
            }
        }

        // ... remaining nested types (Metric, DataSet, PropertySet, etc.) are not included in this excerpt ...
    }
}
#endregion
```

We are using SparkplugNet, but we had to compile our own version to make it compatible with Ignition. We made the following change:

```csharp
// Begin FLS: 14072023/1. IsRequired = true added for compliance with Ignition
[global::ProtoBuf.ProtoMember(3, Name = @"seq", IsRequired = true)]
// End FLS: 14072023/1.
public ulong Seq { get; set; }
```
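To illustrate what this change does on the wire: by default, protobuf-net (like other proto3 encoders) does not write a scalar member whose value equals its default, so `Seq = 0` would produce no `seq` field at all, which is presumably what Ignition objected to; `IsRequired = true` forces the field to be written. The sketch below is a hypothetical, hand-written encoder (`SeqWireDemo` is not part of SparkplugNet or protobuf-net) that assumes only the standard protobuf varint wire format, so it shows the resulting bytes rather than the library's API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of SparkplugNet or protobuf-net:
// hand-encodes a single uint64 field using the protobuf wire format.
public static class SeqWireDemo
{
    // Appends a base-128 varint: 7 bits per byte, high bit = "more bytes follow".
    public static void WriteVarint(List<byte> buffer, ulong value)
    {
        while (value >= 0x80)
        {
            buffer.Add((byte)((value & 0x7F) | 0x80));
            value >>= 7;
        }
        buffer.Add((byte)value);
    }

    // Encodes `fieldNumber` with wire type 0 (varint).
    // alwaysWrite = false mimics the default proto3 behaviour (a zero value is skipped);
    // alwaysWrite = true mimics the effect of IsRequired = true.
    public static byte[] EncodeUInt64Field(int fieldNumber, ulong value, bool alwaysWrite)
    {
        var buffer = new List<byte>();
        if (value == 0 && !alwaysWrite)
        {
            // Nothing is written, so a decoder cannot tell "seq = 0" from "seq missing".
            return buffer.ToArray();
        }
        WriteVarint(buffer, (ulong)fieldNumber << 3); // tag = (field number << 3) | wire type 0
        WriteVarint(buffer, value);
        return buffer.ToArray();
    }
}
```

For `seq` (field 3), `EncodeUInt64Field(3, 0, alwaysWrite: false)` yields no bytes, while `alwaysWrite: true` yields `18-00`: the tag byte 0x18 (field 3, wire type 0) followed by the varint 0.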

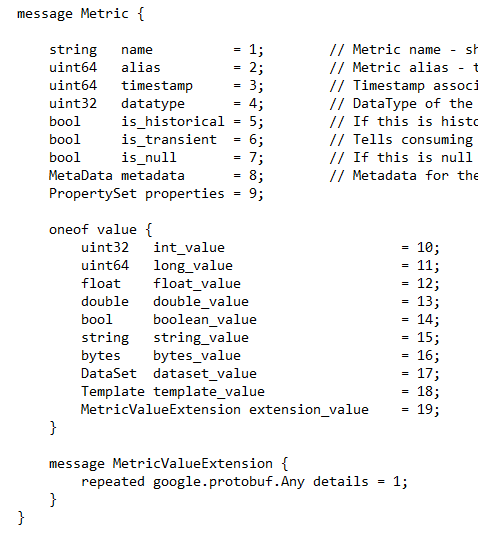

I have found the underlying .proto file, but I can't attach it, so here is its content:

```protobuf
// * Copyright (c) 2015, 2018 Cirrus Link Solutions and others
// *
// * This program and the accompanying materials are made available under the
// * terms of the Eclipse Public License 2.0 which is available at
// * http://www.eclipse.org/legal/epl-2.0.
// *
// * SPDX-License-Identifier: EPL-2.0
// *
// * Contributors:
// *   Cirrus Link Solutions - initial implementation
//
// To compile:
// cd client_libraries/c_sharp
// protoc --proto_path=../../ --csharp_out=src --csharp_opt=base_namespace=Org.Eclipse.Tahu.Protobuf ../../sparkplug_b/sparkplug_b_c_sharp.proto
//
syntax = "proto3";

import "google/protobuf/any.proto";

package org.eclipse.tahu.protobuf;

option java_package = "org.eclipse.tahu.protobuf";
option java_outer_classname = "SparkplugBProto";

message Payload {
    /*
        // Indexes of Data Types

        // Unknown placeholder for future expansion.
        Unknown = 0;

        // Basic Types
        Int8 = 1;
        Int16 = 2;
        Int32 = 3;
        Int64 = 4;
        UInt8 = 5;
        UInt16 = 6;
        UInt32 = 7;
        UInt64 = 8;
        Float = 9;
        Double = 10;
        Boolean = 11;
        String = 12;
        DateTime = 13;
        Text = 14;

        // Additional Metric Types
        UUID = 15;
        DataSet = 16;
        Bytes = 17;
        File = 18;
        Template = 19;

        // Additional PropertyValue Types
        PropertySet = 20;
        PropertySetList = 21;
    */

    message Template {

        message Parameter {
            string name = 1;
            uint32 type = 2;

            oneof value {
                uint32 int_value = 3;
                uint64 long_value = 4;
                float float_value = 5;
                double double_value = 6;
                bool boolean_value = 7;
                string string_value = 8;
                ParameterValueExtension extension_value = 9;
            }

            message ParameterValueExtension {
                repeated google.protobuf.Any extensions = 1;
            }
        }

        string version = 1;                  // The version of the Template to prevent mismatches
        repeated Metric metrics = 2;         // Each metric is the name of the metric and the datatype of the member but does not contain a value
        repeated Parameter parameters = 3;
        string template_ref = 4;             // Reference to a template if this is extending a Template or an instance - must exist if an instance
        bool is_definition = 5;
        repeated google.protobuf.Any details = 6;
    }

    message DataSet {

        message DataSetValue {

            oneof value {
                uint32 int_value = 1;
                uint64 long_value = 2;
                float float_value = 3;
                double double_value = 4;
                bool boolean_value = 5;
                string string_value = 6;
                DataSetValueExtension extension_value = 7;
            }

            message DataSetValueExtension {
                repeated google.protobuf.Any details = 1;
            }
        }

        message Row {
            repeated DataSetValue elements = 1;
            repeated google.protobuf.Any details = 2;
        }

        uint64 num_of_columns = 1;
        repeated string columns = 2;
        repeated uint32 types = 3;
        repeated Row rows = 4;
        repeated google.protobuf.Any details = 5;
    }

    message PropertyValue {

        uint32 type = 1;
        bool is_null = 2;

        oneof value {
            uint32 int_value = 3;
            uint64 long_value = 4;
            float float_value = 5;
            double double_value = 6;
            bool boolean_value = 7;
            string string_value = 8;
            PropertySet propertyset_value = 9;
            PropertySetList propertysets_value = 10;   // List of Property Values
            PropertyValueExtension extension_value = 11;
        }

        message PropertyValueExtension {
            repeated google.protobuf.Any details = 1;
        }
    }

    message PropertySet {
        repeated string keys = 1;            // Names of the properties
        repeated PropertyValue values = 2;
        repeated google.protobuf.Any details = 3;
    }

    message PropertySetList {
        repeated PropertySet propertyset = 1;
        repeated google.protobuf.Any details = 2;
    }

    message MetaData {
        // Bytes specific metadata
        bool is_multi_part = 1;

        // General metadata
        string content_type = 2;             // Content/Media type
        uint64 size = 3;                     // File size, String size, Multi-part size, etc
        uint64 seq = 4;                      // Sequence number for multi-part messages

        // File metadata
        string file_name = 5;                // File name
        string file_type = 6;                // File type (i.e. xml, json, txt, cpp, etc)
        string md5 = 7;                      // md5 of data

        // Catchalls and future expansion
        string description = 8;              // Could be anything such as json or xml of custom properties
        repeated google.protobuf.Any details = 9;
    }

    message Metric {

        string name = 1;                     // Metric name - should only be included on birth
        uint64 alias = 2;                    // Metric alias - tied to name on birth and included in all later DATA messages
        uint64 timestamp = 3;                // Timestamp associated with data acquisition time
        uint32 datatype = 4;                 // DataType of the metric/tag value
        bool is_historical = 5;              // If this is historical data and should not update real time tag
        bool is_transient = 6;               // Tells consuming clients such as MQTT Engine to not store this as a tag
        bool is_null = 7;                    // If this is null - explicitly say so rather than using -1, false, etc for some datatypes.
        MetaData metadata = 8;               // Metadata for the payload
        PropertySet properties = 9;

        oneof value {
            uint32 int_value = 10;
            uint64 long_value = 11;
            float float_value = 12;
            double double_value = 13;
            bool boolean_value = 14;
            string string_value = 15;
            bytes bytes_value = 16;          // Bytes, File
            DataSet dataset_value = 17;
            Template template_value = 18;
            MetricValueExtension extension_value = 19;
        }

        message MetricValueExtension {
            repeated google.protobuf.Any details = 1;
        }
    }

    uint64 timestamp = 1;                    // Timestamp at message sending time
    repeated Metric metrics = 2;             // Repeated forever - no limit in Google Protobufs
    uint64 seq = 3;                          // Sequence number
    string uuid = 4;                         // UUID to track message type in terms of schema definitions
    bytes body = 5;                          // To optionally bypass the whole definition above
    repeated google.protobuf.Any details = 6;
}
```
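To check whether `seq` actually reaches the broker, one option is to inspect a raw payload captured from HiveMQ. The sketch below is a hypothetical diagnostic helper (`PayloadInspector.FindSeq` is not part of SparkplugNet, protobuf-net or Tahu) that walks only the top-level `Payload` fields, using the field numbers from the .proto above (`timestamp = 1`, `metrics = 2`, `seq = 3`, `uuid = 4`, `body = 5`, `details = 6`) and the standard protobuf wire types. It returns `null` when field 3 is absent, which is what a proto3 encoder produces for `seq = 0` unless the field is forced onto the wire.

```csharp
using System;

// Hypothetical diagnostic helper, not part of any library.
public static class PayloadInspector
{
    // Reads a base-128 varint starting at `pos` and advances `pos` past it.
    private static ulong ReadVarint(byte[] data, ref int pos)
    {
        ulong result = 0;
        int shift = 0;
        while (true)
        {
            if (pos >= data.Length) throw new FormatException("Truncated varint");
            byte b = data[pos++];
            result |= (ulong)(b & 0x7F) << shift;
            if ((b & 0x80) == 0) return result;
            shift += 7;
            if (shift >= 64) throw new FormatException("Varint too long");
        }
    }

    // Returns the value of top-level field 3 (seq), or null if it is not on the wire.
    public static ulong? FindSeq(byte[] payload)
    {
        ulong? seq = null;
        int pos = 0;
        while (pos < payload.Length)
        {
            ulong tag = ReadVarint(payload, ref pos);
            int fieldNumber = (int)(tag >> 3);
            int wireType = (int)(tag & 0x7);
            switch (wireType)
            {
                case 0: // varint: timestamp (1), seq (3)
                    ulong value = ReadVarint(payload, ref pos);
                    if (fieldNumber == 3) seq = value; // last occurrence wins
                    break;
                case 1: // fixed 64-bit
                    pos += 8;
                    break;
                case 2: // length-delimited: metrics (2), uuid (4), body (5), details (6)
                    ulong length = ReadVarint(payload, ref pos);
                    pos += checked((int)length);
                    break;
                case 5: // fixed 32-bit
                    pos += 4;
                    break;
                default:
                    throw new FormatException($"Unsupported wire type {wireType}");
            }
        }
        if (pos > payload.Length) throw new FormatException("Truncated field");
        return seq;
    }
}
```

For example, the bytes `08-01-18-00` (timestamp 1, seq 0) return 0, whereas `08-01` (timestamp only) returns null.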

We have also passed the Sparkplug B 3.0.0 Node certification tests.

Best regards

Henrik